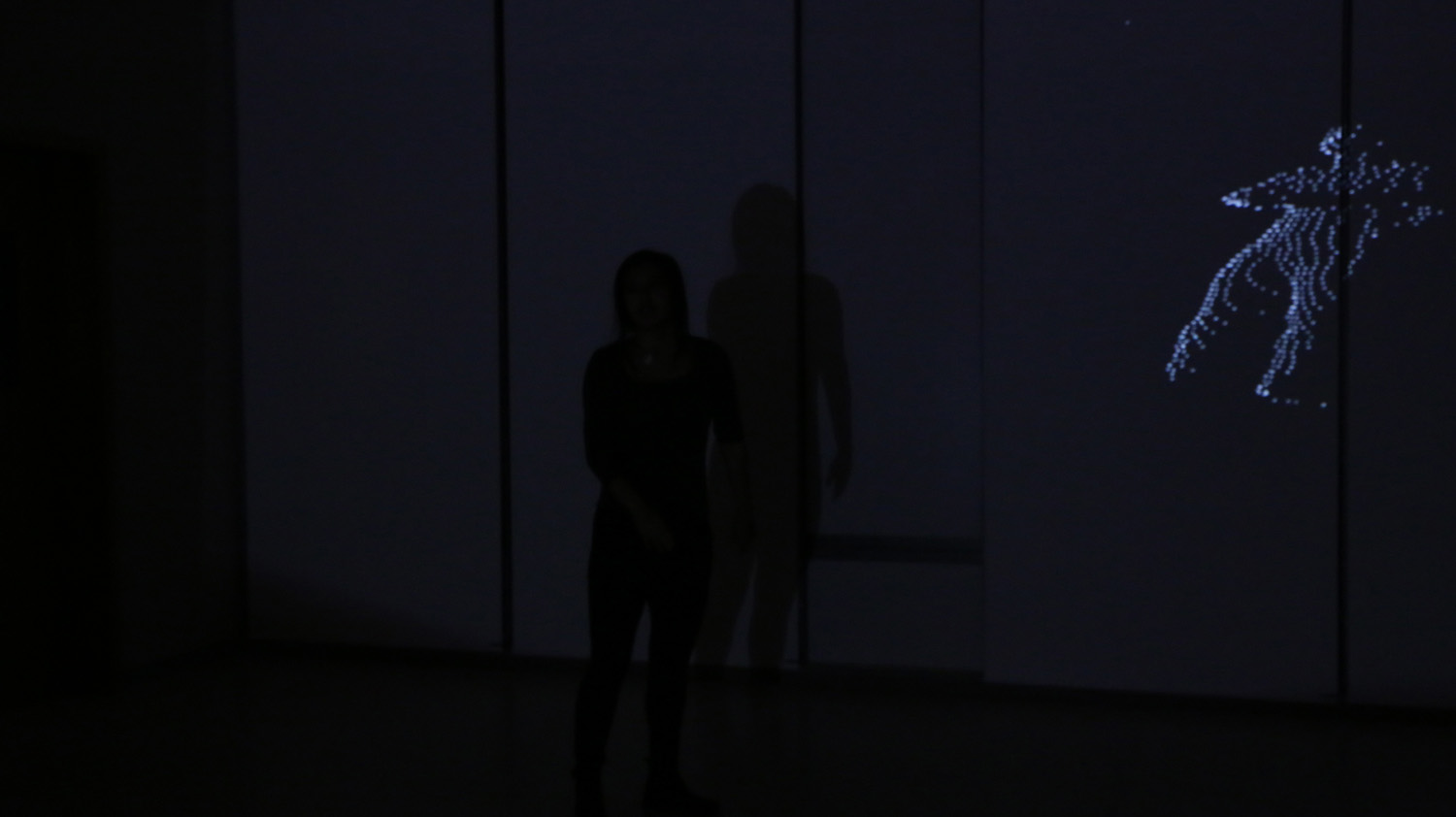

A Song Without Words

Winter 2016

A Song Without Words is an interactive dance piece that uses a Kinect to sense the dancer's body movement and projects visuals generated from that movement.

Dancer and choreographer: Ann Yang

Music: Sandy Lam, 无言歌 ("A Song Without Words")

Filmed by Jingyi Sun, Maggie Walsh and Zhang Zhan

Edited by Zhang Zhan

This dance piece is a visual response to Sandy Lam's song 无言歌. My friend Ann Yang, a talented dancer, choreographed it following my general guidelines. The performance has four stages: birth, accumulation, explosion, and decay. Each stage is represented by its own visual effect, such as a point cloud, a particle explosion system, a particle ring, or collapsing particles. The main point cloud figure is a live visual likeness of the dancer, and the dancer in turn reacts to the visuals, modifying her routine based on what her previous movements and positions have created.

Calibration

- Set up the Kinect and adjust its detection range to get the best detection of the dancer at the proper depth
- Open the Processing sketch to project a solid-color image onto the wall, and adjust the projection area in Resolume so that it fully covers the area

Phase I - Birth

- The dancer rises from the floor
- Particles gather and form a ring around the dancer

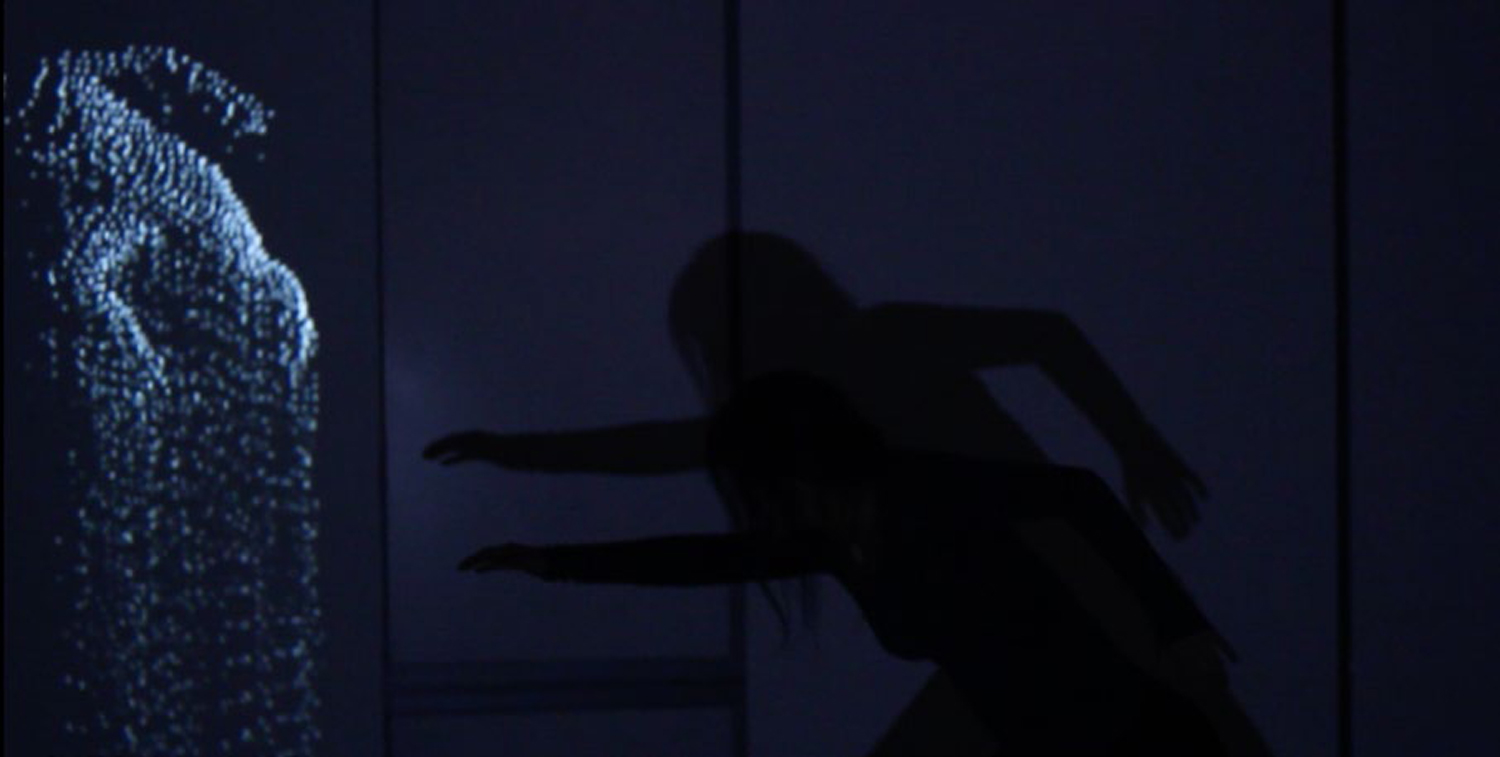

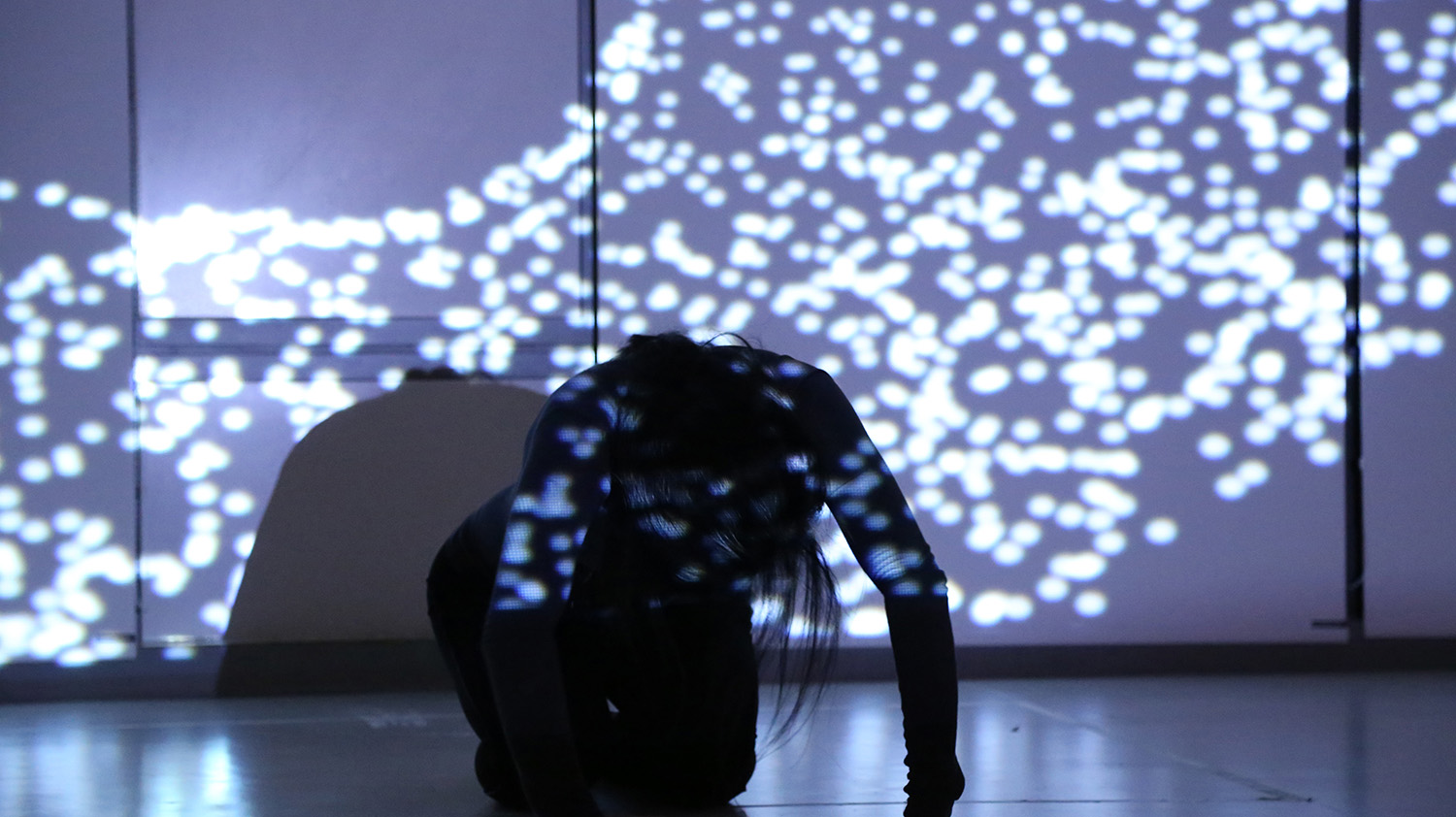
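The ring formation in Phase I can be pictured as each particle easing toward an assigned slot on a circle. The sketch below is a minimal illustration in Python, not the original Processing code: `Particle`, `ring_targets`, `step_toward_ring`, the ring center, and the easing factor are all hypothetical, and the center would presumably come from the dancer's tracked position.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float


def ring_targets(n, cx, cy, radius):
    """Return n evenly spaced positions on a circle centred at (cx, cy)."""
    return [
        (cx + radius * math.cos(2 * math.pi * i / n),
         cy + radius * math.sin(2 * math.pi * i / n))
        for i in range(n)
    ]


def step_toward_ring(particles, targets, easing=0.1):
    """Move each particle a fixed fraction of the remaining distance to its slot.

    Called once per frame, this gives fast initial motion that slows as the
    particles settle into the ring (exponential easing).
    """
    for p, (tx, ty) in zip(particles, targets):
        p.x += (tx - p.x) * easing
        p.y += (ty - p.y) * easing


# Hypothetical usage: 8 particles gathering around a dancer at (320, 240).
particles = [Particle(10.0 * i, 5.0 * i) for i in range(8)]
targets = ring_targets(len(particles), 320, 240, 150)
for _ in range(200):
    step_toward_ring(particles, targets)
```

Easing by a fixed fraction per frame needs no timing state, which suits a piece whose transitions are triggered live.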

Phase II - Accumulation

- The dancer’s point cloud image appears
- The point cloud image starts rotating in virtual space, and the dancer dances in response to where the point cloud image is
- More particles start falling from the point cloud image

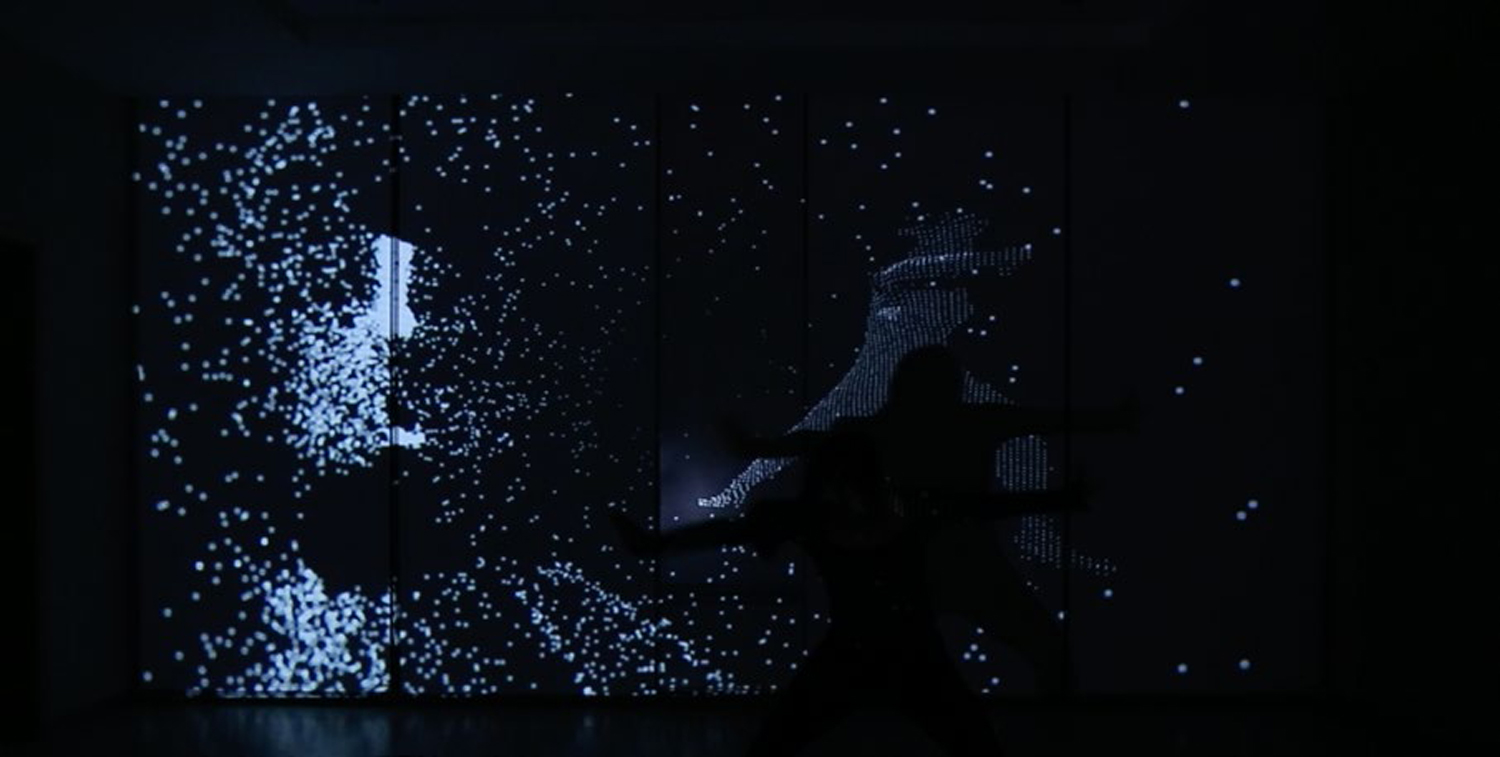
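One way to build and rotate a point cloud like the one in Phase II is to keep only depth pixels inside the detection range and then rotate the resulting points about a vertical axis. This is a simplified Python sketch under stated assumptions: depth values are taken to be millimetres with 0 meaning "no reading", x and y are plain pixel offsets rather than true metric coordinates (a real sketch would use the camera's intrinsics), and the names `depth_to_points` and `rotate_y` are hypothetical.

```python
import math


def depth_to_points(depth, width, height, near, far, step=4, scale=1.0):
    """Turn a row-major depth frame into a list of (x, y, z) points.

    Only pixels whose depth lies in [near, far] are kept, so the detection
    range isolates the dancer from the floor and back wall. Every `step`-th
    pixel is sampled to keep the cloud light. x and y are offsets from the
    frame centre multiplied by `scale` (an assumption, not camera-calibrated).
    """
    points = []
    for y in range(0, height, step):
        for x in range(0, width, step):
            d = depth[y * width + x]
            if near <= d <= far:
                points.append(((x - width / 2) * scale,
                               (y - height / 2) * scale,
                               float(d)))
    return points


def rotate_y(points, angle, pivot_z):
    """Rotate points by `angle` radians about a vertical axis at x = 0, z = pivot_z."""
    c, s = math.cos(angle), math.sin(angle)
    rotated = []
    for x, y, z in points:
        dz = z - pivot_z
        rotated.append((x * c + dz * s, y, -x * s + dz * c + pivot_z))
    return rotated
```

Increasing `angle` a little every frame would produce a continuous spin like the one the dancer responds to.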

Phase III - Explosion

- Particles stop falling
- More randomly placed particles appear and rest still on the surface
- Whenever the dancer’s closest point (usually a foot or a hand) hits a group of particles, those particles immediately bounce off at high speed, creating chaos and large-scale movement

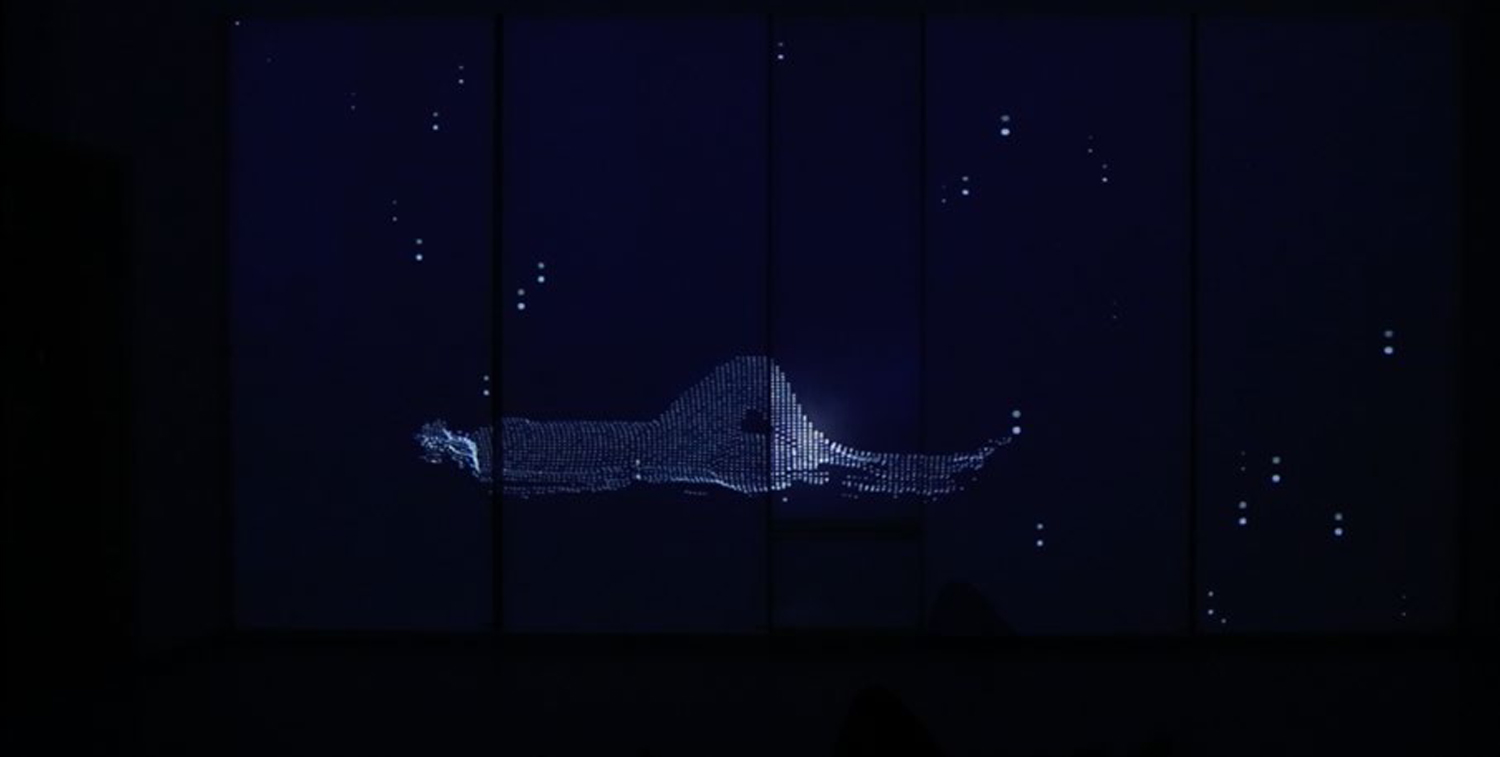
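The collision rule in Phase III can be sketched as: every particle within some radius of the closest point gets a high-speed velocity pointing directly away from that point, and a mild drag then slows it down. This is a hypothetical Python illustration, not the original sketch; `explode_near`, `update`, the radius, the speed, and the drag factor are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def explode_near(particles, hx, hy, radius, speed):
    """Launch every particle within `radius` of the hit point (hx, hy).

    Each launched particle gets a velocity of magnitude `speed` pointing
    directly away from the hit point. Returns how many were launched.
    """
    launched = 0
    for p in particles:
        dx, dy = p.x - hx, p.y - hy
        dist = math.hypot(dx, dy)
        if dist < radius:
            if dist == 0:  # exactly on the hit point: pick an arbitrary direction
                dx, dy, dist = 1.0, 0.0, 1.0
            p.vx = dx / dist * speed
            p.vy = dy / dist * speed
            launched += 1
    return launched


def update(particles, drag=0.98):
    """Advance one frame; the drag factor gradually slows launched particles."""
    for p in particles:
        p.x += p.vx
        p.y += p.vy
        p.vx *= drag
        p.vy *= drag
```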

Phase IV - Decay

- The bouncing particles fade away
- The dancer gets closer to the floor, with more floor movements
- New particles move across the screen from the bottom at an even higher speed, creating an illusion of falling
- The dancer lies down while raising a hand, as if falling
- The background brightens gradually and becomes solid white at the end
- The dancer lies still on the floor

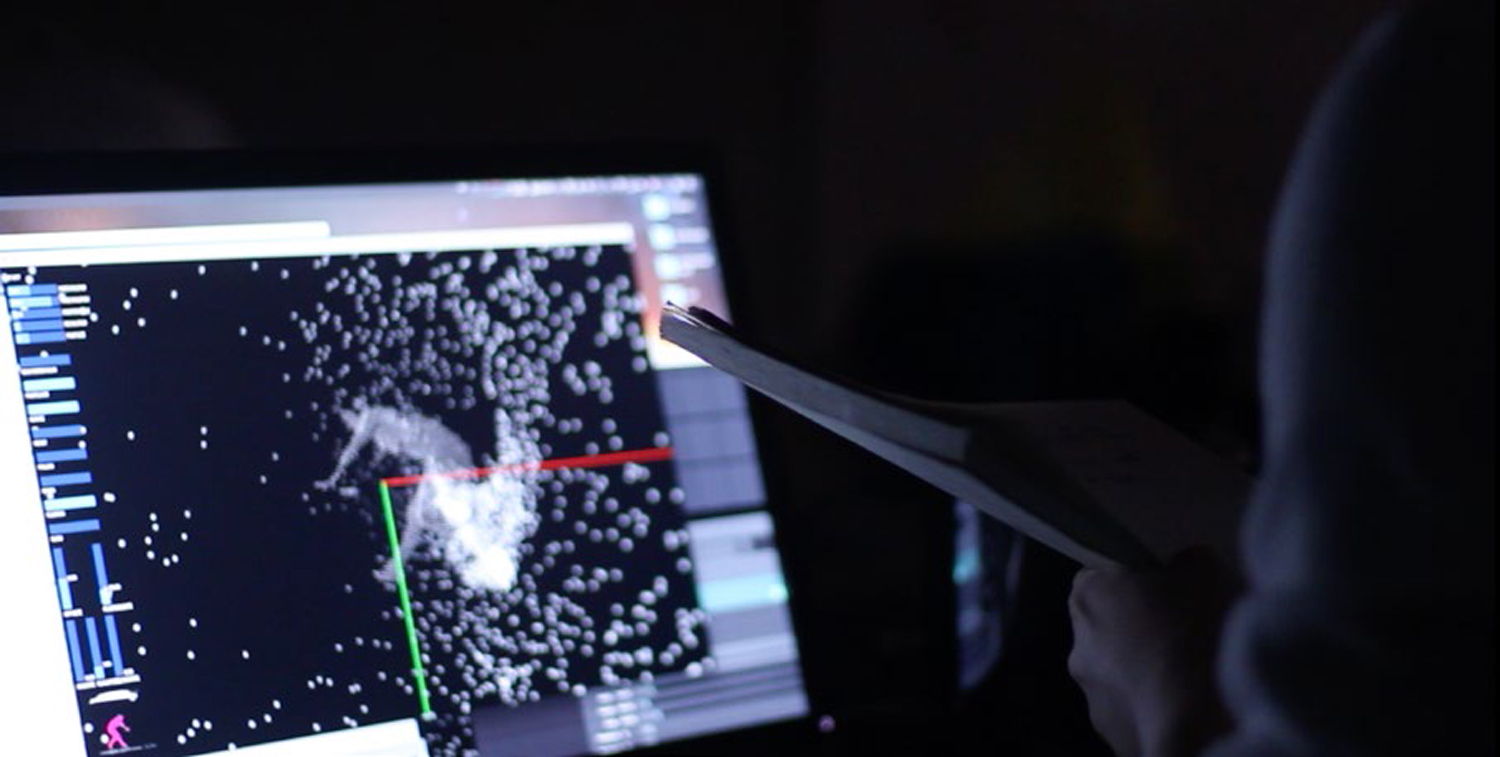
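The two Phase IV effects, fast particles streaming up from the bottom and a background fading to white, might be sketched as below. This is a hypothetical Python illustration: `Streak`, `update_streaks`, `fade_toward_white`, and the per-frame rate are assumptions, and in the actual piece the fade was likely driven from the live control panel rather than a fixed rate.

```python
from dataclasses import dataclass


def fade_toward_white(brightness, rate):
    """Raise a 0-255 background brightness by `rate` per frame, capped at white."""
    return min(255.0, brightness + rate)


@dataclass
class Streak:
    x: float
    y: float
    speed: float


def update_streaks(streaks, height):
    """Move streaks upward (screen y decreases) by their speed each frame.

    A streak that leaves the top edge re-enters at the bottom, so the stream
    reads as continuous upward motion, which makes the viewer feel as if they
    are falling.
    """
    for s in streaks:
        s.y -= s.speed
        if s.y < 0:
            s.y += height
```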

Thanks to Prof. JH Moon for letting me use his Mac Pro, keyboard, trackpad, screen, and speaker.

The visuals are created in a Processing sketch with a control panel. The visuals are sent to Resolume Arena through Syphon, but the control panel is not, which lets me control the visuals live, including particle speed, color gradient, and transitions. A Kinect senses depth and captures the shape of any object within its sensing range. The particles and the explosion are attracted to the closest point within the sensing area.
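Closest-point tracking of this kind is commonly done by scanning the depth frame for the smallest valid reading inside the detection range, then pulling particles toward it. The Python sketch below assumes a row-major depth frame where 0 means "no reading"; the `near`/`far` thresholds stand in for the detection range set during calibration, and `closest_point`, `attract`, and the spring constants are hypothetical rather than taken from the original sketch.

```python
from dataclasses import dataclass


def closest_point(depth, width, near, far):
    """Return (x, y, depth) of the nearest pixel with near <= depth <= far, or None.

    Zero readings (no data) and anything outside the detection range are ignored.
    """
    best = None
    for i, d in enumerate(depth):
        if near <= d <= far and (best is None or d < best[2]):
            best = (i % width, i // width, d)
    return best


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def attract(particles, tx, ty, strength=0.05, damping=0.9):
    """Pull each particle toward (tx, ty) like a damped spring, one frame at a time."""
    for p in particles:
        p.vx = (p.vx + (tx - p.x) * strength) * damping
        p.vy = (p.vy + (ty - p.y) * strength) * damping
        p.x += p.vx
        p.y += p.vy
```

The pixel coordinates returned by `closest_point` would still need scaling from the depth frame's resolution to the projection's resolution before being passed to `attract`.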

Special thanks to Prof. JH Moon, Jingyi Sun and Maggie Walsh.